by Zhen Liu

Up first: data preprocessing

Do you feel frustrated by breaking your data analytics flow when searching for syntax? Why do you still not remember it after looking up it for the third time?? It’s because you haven’t practiced it enough to build muscle memory for it yet.

Now, imagine that when you are coding, the Python syntax and functions just fly out from your fingertips following your analytical thoughts. How great is that! This tutorial is to help you get there.

I recommend practicing this script every morning for 10 mins, and repeating it for a week. It’s like doing a few small crunches a day — not for your abs, but for your data science muscles. Gradually, you’ll notice the improvement in data analytics programming efficiency after this repeat training.

To begin with my ‘data science workout’, in this tutorial we’ll practice the most common syntax for data preprocessing as a warm-up session ;)

Contents:0 . Read, View and Save data1 . Table’s Dimension and Data Types2 . Basic Column Manipulation3 . Null Values: View, Delete and Impute4 . Data Deduplication0. Read, View and Save data

First, load the libraries for our exercise:

Now we’ll read data from my GitHub repository. I downloaded the data from Zillow.

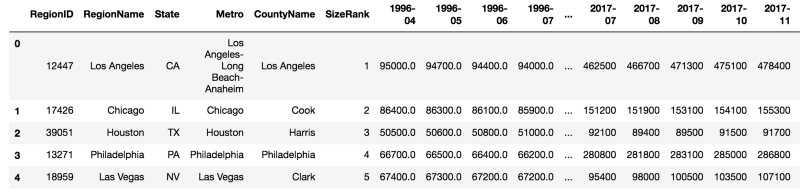

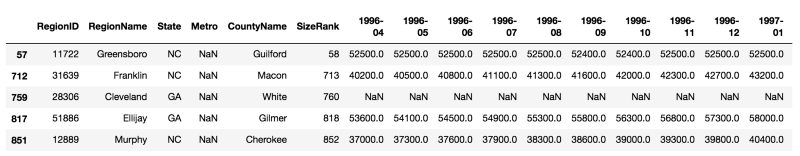

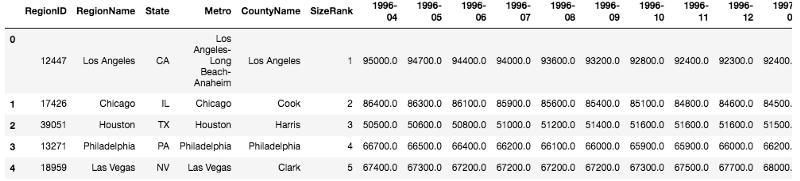

And the results look like this:

Saving a file is dataframe.to_csv(). If you don’t want the index number to be saved, use dataframe.to_csv( index = False ).

1 . Table’s Dimension and Data Types

1.1 Dimension

How many rows and columns in this data?

1.2 Data Types

What are the data types of your data, and how many columns are numeric?

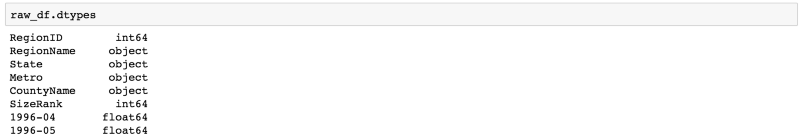

Output of the first few columns’ data types:

If you want to be more specific about your data, use select_dtypes() to include or exclude a data type. Question: if I only want to look at 2018’s data, how do I get that?

2. Basic Column Manipulation

2.1 Subset data by columns

Select columns by data types:

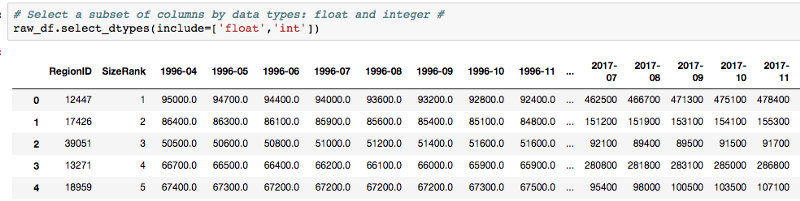

For example, if you only want float and integer columns:

Select and drop columns by names:

2.2 Rename Columns

How do I rename the columns if I don’t like them? For example, change ‘State’ to ‘state_’; ‘City’ to ‘city_’:

3. Null Values: View, Delete and Impute

3.1 How many rows and columns have null values?

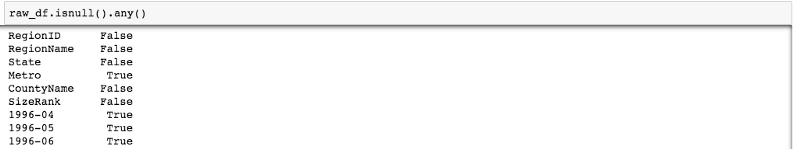

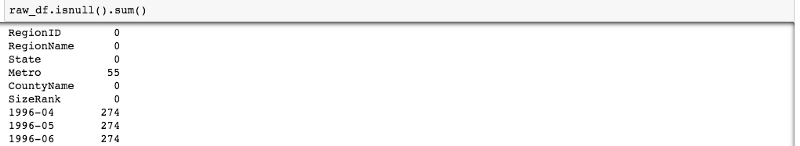

The outputs of isnull.any() versus isnull.sum():

Select data that isn’t null in one column, for example, ‘Metro’ isn’t null.

3.2 Select rows that are not null for a fixed set of columns

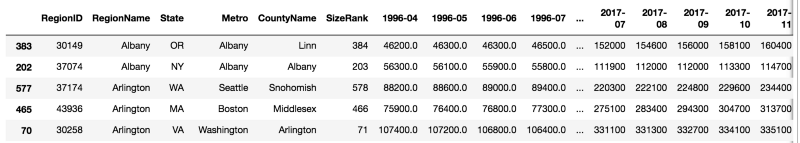

Select a subset of data that doesn’t have null after 2000:

If you want to select the data in July, you need to find the columns containing ‘-07’. To see if a string contains a substring, you can use substring in string, and it’ll output true or false.

3.3 Subset Rows by Null Values

Select rows where we want to have at least 50 non-NA values, but don’t need to be specific about the columns:

3.4 Drop and Impute Missing Values

Fill NA or impute NA:

Use your own condition to fill using the where function:

4. Data Deduplication

We need to make sure there’s no duplicated rows before we aggregate data or join them.

We want to see whether there are any duplicated cities/regions. We need to decide what unique ID (city, region) we want to use in the analysis.

Drop Duplicated values.

The ‘CountyName’ and ‘SizeRank’ combination is unique already. So we just use the columns to demonstrate the syntax of drop_duplicated.

That’s it for the first part of my series on building muscle memory for data science in Python. The full script can be found here.

Stay tuned! My next tutorial will show you how to ‘curl the data science muscles’ for slicing and dicing data.

Follow me and give me a few claps if you find this helpful :)

While you are working on Python, maybe you’ll be interested in my previous article:

Learn Spark for Big Data Analytics in 15 mins!

I guarantee you that this short tutorial will save you a TON of time from reading the long documentations. Ready to…towardsdatascience.com