by Yan Cui

How to choose the best event source for pub/sub messaging with AWS Lambda

AWS offers a wealth of options for implementing messaging patterns such as Publish/Subscribe (often shortened to pub/sub) with AWS Lambda. In this article, we’ll compare and contrast some of these options.

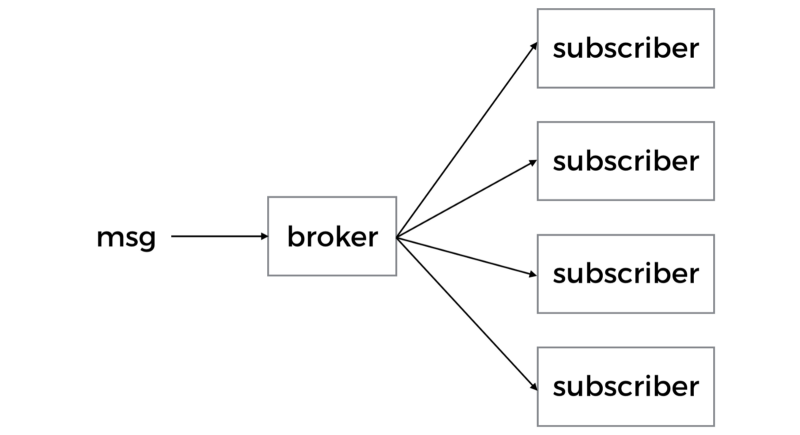

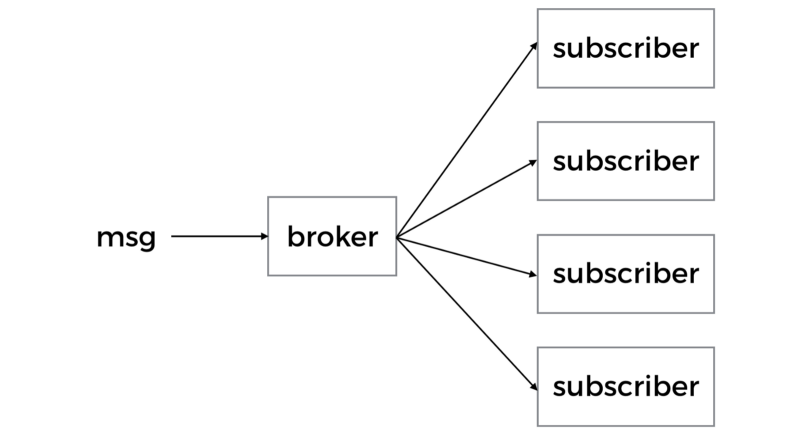

The pub/sub pattern

Pub/Sub is a messaging pattern where publishers and subscribers are decoupled through an intermediary message broker (ZeroMQ, RabbitMQ, SNS, and so on).

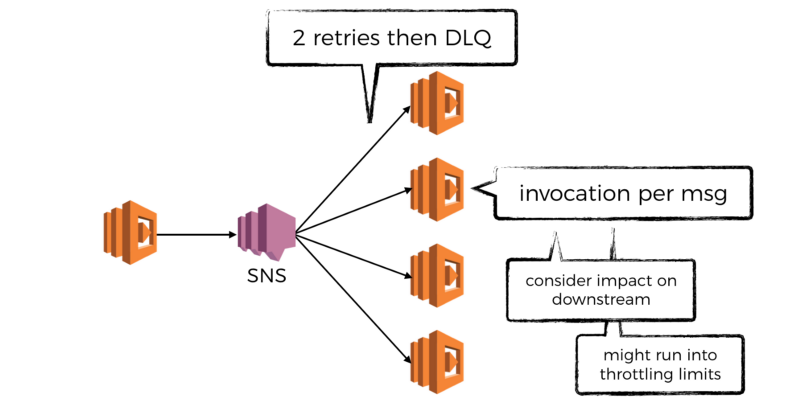

In the AWS ecosystem, the obvious candidate for the broker role is Simple Notification Service (SNS).

SNS will make three attempts to have your Lambda function process a message before sending it to a Dead Letter Queue (DLQ), provided a DLQ is specified for the function. However, according to an analysis by the folks at OpsGenie, the number of retries can be as many as six.

Another thing to consider is the degree of parallelism this setup offers. For each message, SNS will create a new invocation of your function. So if you publish 100 messages to SNS, then you can have 100 concurrent executions of the subscribed Lambda function.
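To make this concrete, here is a minimal sketch of a Lambda handler subscribed to an SNS topic. The `Records[].Sns.Message` layout follows the SNS event format; the JSON payload and its `order_id` field are hypothetical, chosen purely for illustration.

```python
import json


def handler(event, context):
    """Process every SNS message delivered in one Lambda invocation."""
    processed = []
    # SNS notifications arrive under event["Records"][i]["Sns"].
    for record in event.get("Records", []):
        sns = record["Sns"]
        # The published payload is a string; this sketch assumes it is JSON.
        body = json.loads(sns["Message"])
        processed.append({"message_id": sns["MessageId"], "body": body})
    return processed


# A trimmed-down sample event for local experimentation (hypothetical payload).
sample_event = {
    "Records": [
        {
            "EventSource": "aws:sns",
            "Sns": {"MessageId": "msg-1", "Message": json.dumps({"order_id": 42})},
        }
    ]
}
result = handler(sample_event, None)
```

Since each published message triggers its own invocation, nothing in the handler itself limits how many copies run at the same time.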

This is great if you’re optimising for throughput.

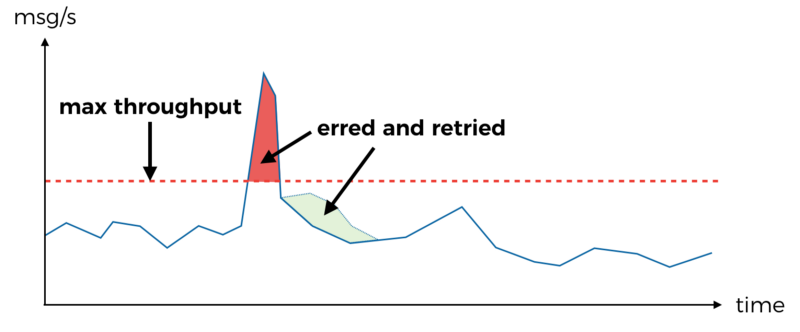

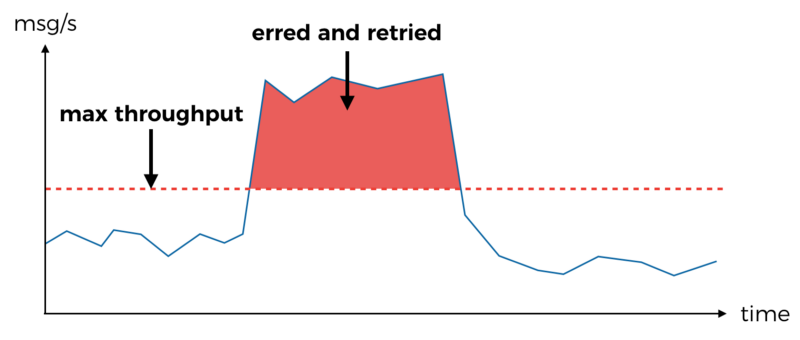

However, we’re often constrained by the max throughput our downstream dependencies can handle — databases, S3, internal/external services, and so on.

If the burst in throughput is short, then there’s a good chance the retries would be sufficient (there’s a randomized, exponential back off between retries too) and you won’t miss any messages.
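As a rough illustration of what a randomized, exponential back off looks like, the sketch below computes retry delays using a generic "full jitter" scheme. This is not the actual schedule used by SNS or Lambda; the base delay, the cap, and the function name are all assumptions made for the example.

```python
import random


def backoff_delays(max_retries, base_seconds=1.0, cap_seconds=60.0, rng=None):
    """Return one randomized delay per retry, growing exponentially up to a cap."""
    rng = rng or random.Random()
    delays = []
    for attempt in range(max_retries):
        # The ceiling doubles on every attempt until it reaches the cap.
        ceiling = min(cap_seconds, base_seconds * (2 ** attempt))
        # Picking a random point below the ceiling spreads retries out in time.
        delays.append(rng.uniform(0, ceiling))
    return delays


# Seeded only so that repeated local runs produce the same delays.
delays = backoff_delays(max_retries=3, rng=random.Random(7))
```

The randomness matters because it stops a burst of failed messages from all retrying at the same instant and hitting the downstream system with a second burst.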

If the burst in throughput is sustained over a long period of time, then you can exhaust the max number of retries. At this point you’ll have to rely on the DLQ and possibly human intervention in order to recover the messages that couldn’t be processed the first time around.

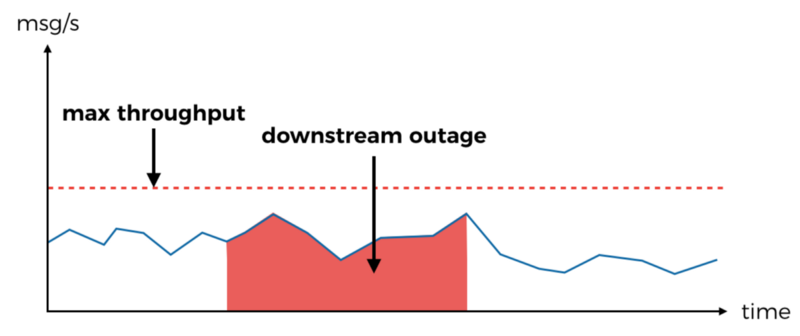

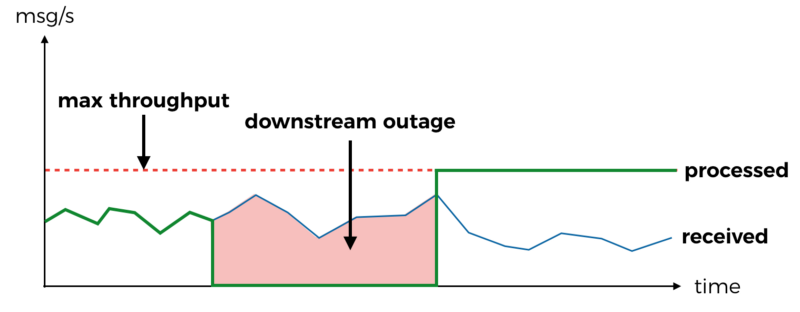

Similarly, if the downstream dependency experiences an outage, then all messages received and retried during the outage are bound to fail.

You can also run into the Lambda limit on the number of concurrent executions in a region. Since this is an account-wide limit, it will also impact your other systems within the account that rely on AWS Lambda: APIs, event processing, cron jobs, and so on.

SNS is also prone to temporal issues, such as bursts in traffic and downstream outages. Kinesis, on the other hand, deals with these issues much better, as described below:

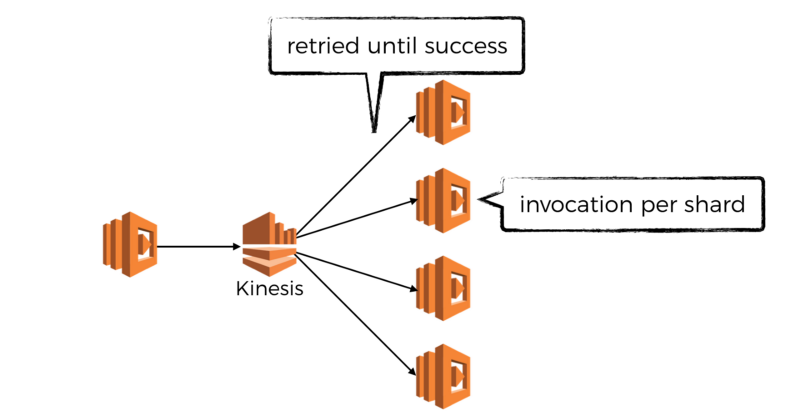

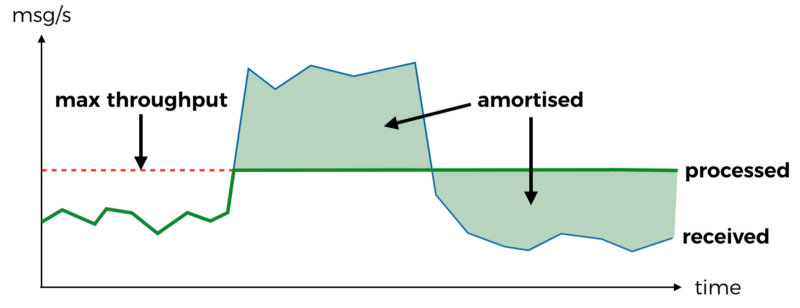

- The degree of parallelism is constrained by the number of shards, which can be used to amortize bursts in the message rate

- Records are retried until they succeed, so you will eventually be able to process them unless the outage lasts longer than the retention period you have on the stream (the default is 24 hours)
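The retry behaviour above follows from how a Kinesis-triggered function receives its records in batches. Here is a minimal sketch of such a handler, assuming JSON payloads; the `user_id` field is hypothetical.

```python
import base64
import json


def handler(event, context):
    """Decode every record in a Kinesis batch delivered to Lambda."""
    decoded = []
    for record in event["Records"]:
        # Kinesis payloads arrive base64-encoded under record["kinesis"]["data"].
        raw = base64.b64decode(record["kinesis"]["data"])
        # This sketch assumes each payload is a JSON document.
        decoded.append(json.loads(raw))
    # An exception escaping the handler fails the batch, which is what
    # causes the records to be retried.
    return decoded


# A trimmed-down sample batch with one hypothetical payload.
payload = base64.b64encode(json.dumps({"user_id": 7}).encode("utf-8")).decode("ascii")
sample_event = {"Records": [{"kinesis": {"partitionKey": "user-7", "data": payload}}]}
result = handler(sample_event, None)
```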

But Kinesis Streams is not without its own problems. In fact, from my experience using Kinesis Streams with Lambda, I have found a number of caveats that need to be understood in order to make effective use of the service.

You can read about these caveats in another article I wrote here.

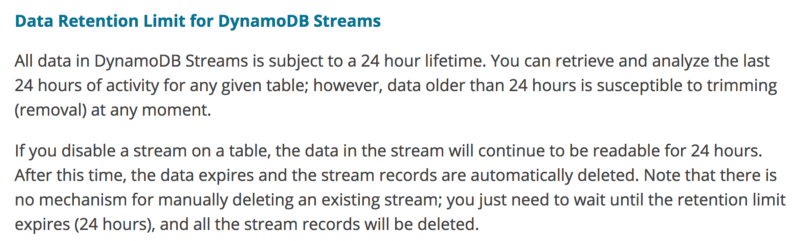

Interestingly, Kinesis Streams is not the only streaming option available on AWS. There is also DynamoDB Streams.

By and large, DynamoDB Streams + Lambda works the same way as Kinesis Streams + Lambda. Operationally, it does have some interesting twists:

- DynamoDB Streams auto scales the number of shards

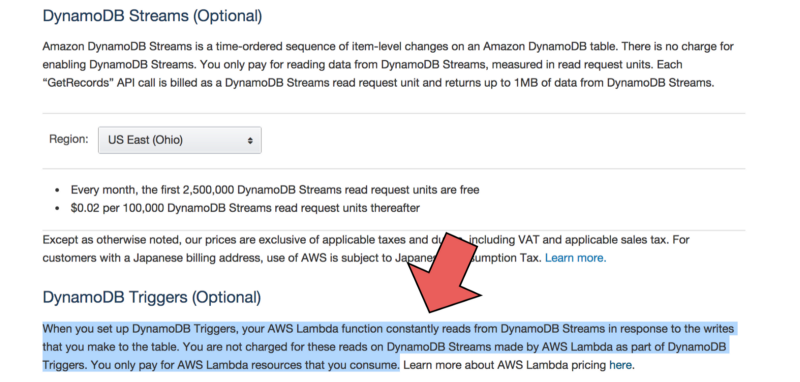

- If you’re processing DynamoDB Streams with AWS Lambda, then you don’t pay for the reads from DynamoDB Streams (but you still pay for the read and write capacity units for the DynamoDB table itself)

- Kinesis Streams offers the option to extend data retention to 7 days, but DynamoDB Streams doesn’t offer such an option

The fact that DynamoDB Streams auto scales the number of shards can be a double-edged sword. On one hand, it eliminates the need for you to manage and scale the stream (or come up with home-baked auto scaling solutions). But on the other hand, it can also diminish the ability to amortize spikes in the load you pass on to downstream systems.

As far as I know, there is no way to limit the number of shards a DynamoDB stream can scale up to, which is something you’d surely consider when implementing your own auto scaling solution.

I think the most pertinent question is, “what is your source of truth?”

Does a row being written in DynamoDB make it canon to the state of your system? This is certainly the case in most N-tier systems that are built around a database, regardless of whether it’s an RDBMS or NoSQL database.

In an event-sourced system where state is modeled as a sequence of events (as opposed to a snapshot), the source of truth might well be the Kinesis stream. That is, as soon as an event is written to the stream, it’s considered canon to the state of the system.

Then, there are other considerations around cost, auto-scaling, and so on.

From a development point of view, DynamoDB streams also have some limitations and shortcomings:

- Each stream is limited to events from one table

- The records describe DynamoDB events and not events from your domain, which I’ve always felt creates a sense of dissonance when working with these events
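One way to reduce that dissonance is to translate each stream record into a domain event before doing anything else with it. The sketch below assumes a hypothetical orders table; the event names and attributes are made up for illustration, and only the `S`, `N`, and `BOOL` attribute types are unwrapped.

```python
# Hypothetical mapping from DynamoDB change types to domain event names.
EVENT_NAMES = {
    "INSERT": "OrderPlaced",
    "MODIFY": "OrderUpdated",
    "REMOVE": "OrderCancelled",
}


def unwrap(attribute):
    """Turn a typed attribute such as {"N": "25"} into a plain Python value."""
    type_tag, value = next(iter(attribute.items()))
    if type_tag == "N":
        # Numbers are transmitted as strings in stream records.
        return float(value) if "." in value else int(value)
    # S and BOOL pass through as-is; nested types are not handled in this sketch.
    return value


def to_domain_event(record):
    """Map one DynamoDB Streams record to a domain-level event."""
    change = record["dynamodb"]
    # Which images are present depends on the stream's view type.
    image = change.get("NewImage") or change.get("OldImage") or {}
    return {
        "type": EVENT_NAMES[record["eventName"]],
        "data": {name: unwrap(attr) for name, attr in image.items()},
    }


sample_record = {
    "eventName": "INSERT",
    "dynamodb": {
        "Keys": {"order_id": {"S": "o-1"}},
        "NewImage": {"order_id": {"S": "o-1"}, "total": {"N": "25"}},
    },
}
domain_event = to_domain_event(sample_record)
```

Keeping this translation in one place means the rest of the function can work with domain events and doesn't need to know they came from a table.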

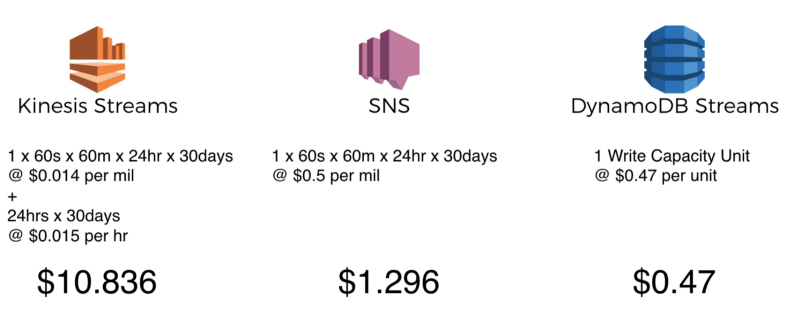

Excluding the cost of Lambda invocations for processing the messages, here are some cost projections for using SNS vs Kinesis Streams vs DynamoDB Streams as the broker. I’m making the assumption that throughput is consistent, and that each message is 1KB in size.

Monthly cost at 1 msg/s

Monthly cost at 1,000 msg/s

These projections should not be taken at face value. For starters, the assumption about a perfectly consistent throughput and message size is unrealistic, and you’ll need some headroom with Kinesis and DynamoDB streams even if you’re not hitting the throttling limits.

That said, what these projections do tell me is that:

- You get an awful lot with each shard in Kinesis streams

- While there’s a baseline cost for using Kinesis streams, the cost grows far more slowly than with SNS and DynamoDB streams as usage scales up, thanks to the significantly lower cost per million requests
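The arithmetic behind this kind of projection can be sketched in a few lines. The unit prices below are assumptions for illustration only (they are not taken from this article and will differ by region and over time), so check the current pricing pages before relying on them. The sketch also ignores free tiers, assumes a 30-day month, and assumes each 1KB message counts as a single Kinesis PUT payload unit.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumes a 30-day month
HOURS_PER_MONTH = 30 * 24


def monthly_messages(rate_per_second):
    """Messages published in a month at a perfectly constant rate."""
    return rate_per_second * SECONDS_PER_MONTH


def sns_monthly_cost(rate_per_second, price_per_million=0.50):
    """SNS cost: purely per-request (assumed price per million publishes)."""
    return monthly_messages(rate_per_second) / 1_000_000 * price_per_million


def kinesis_monthly_cost(rate_per_second, shards=1,
                         shard_hour_price=0.015, put_price_per_million=0.014):
    """Kinesis cost: a per-shard baseline plus a small per-request charge."""
    shard_cost = shards * HOURS_PER_MONTH * shard_hour_price
    put_cost = monthly_messages(rate_per_second) / 1_000_000 * put_price_per_million
    return shard_cost + put_cost


for rate in (1, 1000):
    print(rate, round(sns_monthly_cost(rate), 2), round(kinesis_monthly_cost(rate), 2))
```

Under these assumed prices, the shard-hour charge dominates at low volume, while at high volume the per-request price is what matters. Note that a single shard at 1,000 msg/s of 1KB messages sits right at the shard's limits, so in practice you would add shards for headroom.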

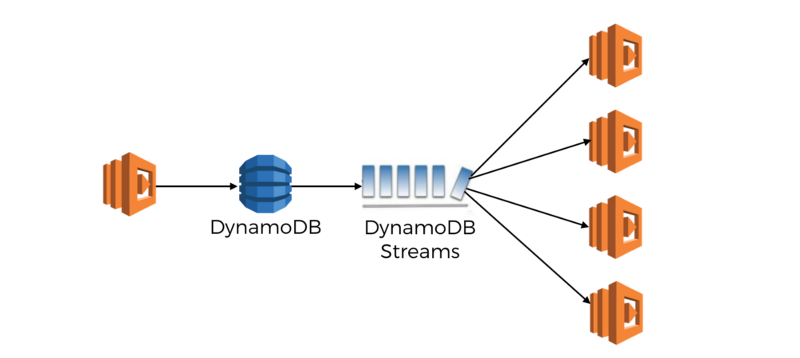

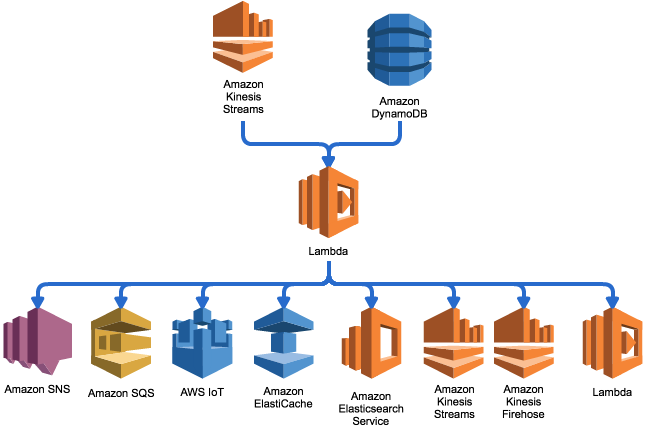

Whilst SNS, Kinesis, and DynamoDB streams are your basic choices for the broker, Lambda functions can also act as brokers in their own right and propagate events to other services.

This is the approach used by the aws-lambda-fanout project from awslabs. It allows you to propagate events from Kinesis and DynamoDB streams to other services that cannot directly subscribe to the three basic choices of broker (either because of account/region limitations or because they’re just not supported).

While it’s a nice idea and definitely meets some specific needs, it’s worth bearing in mind the extra complexities it introduces, such as handling partial failures, dealing with downstream outages, misconfigurations, and so on.
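To give a feel for the partial-failure problem, here is a minimal sketch of a fan-out step. It is not how aws-lambda-fanout is implemented; the targets are modelled as plain Python callables, and every name here is hypothetical.

```python
def fan_out(records, targets):
    """Forward each record to every target and return the deliveries that failed."""
    failures = []
    for record in records:
        for name, send in targets.items():
            try:
                send(record)
            except Exception as error:
                # One failing target must not block delivery to the others.
                failures.append((name, record, str(error)))
    return failures


delivered = []


def flaky(record):
    """Stand-in for a downstream service that is currently unavailable."""
    raise RuntimeError("downstream unavailable")


# Hypothetical targets: one healthy, one failing.
targets = {"audit": delivered.append, "billing": flaky}
failures = fan_out(["e1", "e2"], targets)
```

Even in this toy version you have to decide what to do with `failures`: retry them, park them somewhere, or fail the whole batch and risk duplicate deliveries to the targets that already succeeded.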

Conclusion

So what is the best event source for doing pub/sub with AWS Lambda? Like most tech decisions, it depends on the problem you’re trying to solve, and the constraints you’re working with.

In this post, we looked at SNS, Kinesis Streams, and DynamoDB Streams as candidates for the broker role. We walked through a number of scenarios to see how the choice of event source affects scalability, parallelism, resilience against temporal issues, and cost.

You should now have a much better understanding of the tradeoffs between various event sources when working with Lambda.

Until next time!